Unrendering triangle meshes with gradient descent

Over the past few weeks I have been exploring a new technique for training triangle meshes to match a reference image. The basic idea is to render a test image, evaluate it by computing the mean squared error between the test image and the reference, and then apply gradient descent to the model in order to minimise that error.

A simple model

For this demonstration I have used a simple model with a single triangle, a foreground color and a background color.

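```javascript
// A single light-grey triangle on a dark-grey background.
let model = {
  backgroundColor: [0.25, 0.25, 0.25], // RGB, each component in [0, 1]
  foregroundColor: [0.75, 0.75, 0.75], // RGB fill colour of the triangle
  // Three (x, y) vertex positions, one pair per corner
  vertices: [
    -0.5, -0.5,
     0.5, -0.5,
     0.0,  0.5
  ]
};
```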

Rendering this base model yields the following:

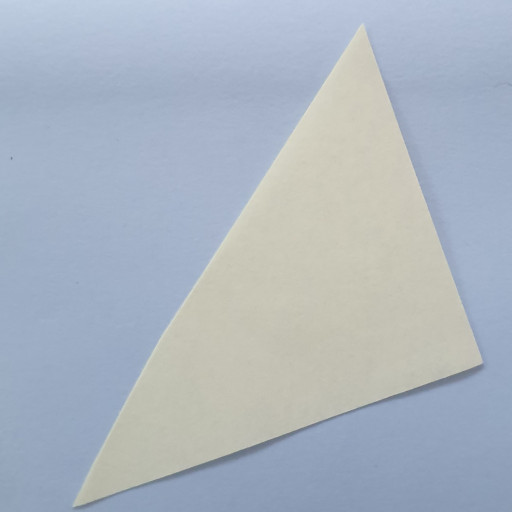
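The post renders with WebGL. To make the render step concrete outside a browser, here is a hypothetical CPU rasteriser for the same single-triangle model. It is a sketch under assumptions, not the author's code: vertices are assumed to be in clip space (x and y from -1 to 1, y pointing up), each pixel is coloured by testing its centre against the triangle with no anti-aliasing, the foreground property is assumed to be spelled `foregroundColor`, and `renderModel` and `edge` are illustrative names.

```javascript
// Hypothetical CPU stand-in for the WebGL render (assumes clip-space vertices, no anti-aliasing).
// Returns a Float32Array of RGB values with row 0 at the top of the image.

// Twice the signed area of triangle (a, b, p); its sign says which side of edge ab point p is on.
function edge(ax, ay, bx, by, px, py) {
  return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
}

function renderModel(model, width, height) {
  const [x0, y0, x1, y1, x2, y2] = model.vertices;
  const image = new Float32Array(width * height * 3);
  for (let row = 0; row < height; row++) {
    for (let col = 0; col < width; col++) {
      // Map the pixel centre to clip space: x grows to the right, y grows upwards.
      const px = ((col + 0.5) / width) * 2 - 1;
      const py = 1 - ((row + 0.5) / height) * 2;
      const w0 = edge(x1, y1, x2, y2, px, py);
      const w1 = edge(x2, y2, x0, y0, px, py);
      const w2 = edge(x0, y0, x1, y1, px, py);
      // Inside when p lies on the same side of all three edges (either winding order).
      const inside = (w0 >= 0 && w1 >= 0 && w2 >= 0) || (w0 <= 0 && w1 <= 0 && w2 <= 0);
      const color = inside ? model.foregroundColor : model.backgroundColor;
      image.set(color, (row * width + col) * 3);
    }
  }
  return image;
}
```

Under these assumptions, calling `renderModel(model, 64, 64)` on the model above should give an upward-pointing light triangle on a dark background.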

In order to compute the error of a model, we render the test image into a texture and then compare it with the reference image in a shader:


```glsl
// Fragment Shader
precision highp float;

varying highp vec2 v_texture_coord;

uniform sampler2D u_hypothesis;
uniform sampler2D u_experience;

void main() {
    vec4 experience = texture2D(u_experience, v_texture_coord);

    // WebGL inverts the framebuffer so we compensate in the shader
    vec2 hypothesis_coords = vec2(v_texture_coord.x, 1.0 - v_texture_coord.y);
    vec4 hypothesis = texture2D(u_hypothesis, hypothesis_coords);

    // Squared difference for each colour channel; alpha is set to 1.0
    vec4 e = experience - hypothesis;
    gl_FragColor = vec4(e.x*e.x, e.y*e.y, e.z*e.z, 1.0);
}
```

Here experience refers to the reference image, and hypothesis refers to the test image rendered from the model.
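The per-pixel work the shader does can be mirrored on the CPU, which makes the vertical flip explicit. This is an illustrative translation rather than code from the post: `computeErrorMap` is a hypothetical name, both images are assumed to be `Float32Array`s of RGB values in [0, 1], the reference (`experience`) is assumed to have row 0 at the top, and the rendered image (`hypothesis`) is assumed to come from a framebuffer whose row 0 is the bottom.

```javascript
// Hypothetical CPU version of the error shader.
// experience: reference RGB image, row 0 at the top (assumed layout).
// hypothesis: rendered RGB image read from a framebuffer, row 0 at the bottom (assumed layout).
function computeErrorMap(experience, hypothesis, width, height) {
  const errorMap = new Float32Array(width * height * 3);
  for (let row = 0; row < height; row++) {
    // Equivalent of the shader's 1.0 - v_texture_coord.y
    const flippedRow = height - 1 - row;
    for (let col = 0; col < width; col++) {
      for (let channel = 0; channel < 3; channel++) {
        const i = (row * width + col) * 3 + channel;
        const j = (flippedRow * width + col) * 3 + channel;
        const e = experience[i] - hypothesis[j];
        errorMap[i] = e * e; // squared difference, as in gl_FragColor
      }
    }
  }
  return errorMap;
}
```

Without the flip, an image compared against a correctly rendered copy of itself would show error wherever the top and bottom halves differ.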

This yields an error map, in which each pixel holds the squared difference between the two images for each colour channel.


We then copy the pixel values out of WebGL using gl.readPixels and compute the mean squared error in JavaScript:

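```javascript
// pixels holds RGBA bytes from gl.readPixels: skip every 4th value (alpha) and average R, G and B.
let error = pixels.reduce((sum, value, i) => (i % 4 === 3 ? sum : sum + value / 255.0), 0) / (width * height * 3);
```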

The goal is now to tweak the properties of the model (foreground colour, background colour and vertex positions) in order to minimise the error calculated above.
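Since every trainable property is a number, it can help to flatten the model into one array of parameters: 3 background components, 3 foreground components and 6 vertex coordinates, 12 in total. `modelToParams` and `paramsToModel` are hypothetical helpers, and they assume the foreground property is spelled `foregroundColor`.

```javascript
// Hypothetical helpers: flatten the model's 12 trainable numbers into one array and back.
// Order: background RGB (indices 0-2), foreground RGB (3-5), vertex x/y pairs (6-11).
function modelToParams(model) {
  return [...model.backgroundColor, ...model.foregroundColor, ...model.vertices];
}

function paramsToModel(params) {
  return {
    backgroundColor: params.slice(0, 3),
    foregroundColor: params.slice(3, 6),
    vertices: params.slice(6, 12),
  };
}
```

Training can then work on a plain array, with `paramsToModel` rebuilding the object the renderer expects before each render.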

We do this using gradient descent. Here we train one attribute at a time: take the derivative of the cost function with respect to that attribute, multiply it by a trainingRate, and subtract the result from the original value:

newValue = oldValue - trainingRate * derivative(oldValue)
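The update rule can be checked on a function whose derivative is known. This toy example is not part of the renderer: it minimises `error(x) = (x - 3)^2`, whose derivative is `2 * (x - 3)`, using an assumed training rate of 0.1; `gradientDescentStep` is a hypothetical name.

```javascript
// Hypothetical helper implementing newValue = oldValue - trainingRate * derivative(oldValue),
// where derivative gives the slope of the error with respect to this one value.
function gradientDescentStep(oldValue, derivative, trainingRate) {
  return oldValue - trainingRate * derivative(oldValue);
}

// Toy error (not a render): error(x) = (x - 3)^2, minimised at x = 3, derivative 2(x - 3).
const derivative = (x) => 2 * (x - 3);
let x = 0;
for (let step = 0; step < 50; step++) {
  x = gradientDescentStep(x, derivative, 0.1);
}
console.log(x.toFixed(4)); // prints 3.0000
```

Each step multiplies the distance from the minimum by `1 - 2 * trainingRate = 0.8`, so the value converges geometrically; with a training rate of 1 or more this particular example would no longer converge.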

Even the simple renderer used here is too complex for an analytical approach to be feasible, but the derivative can be approximated by rendering the model twice, once with the original value and once with a small delta added to it, and dividing the change in error by that delta. With a sufficiently small delta, this should be a decent approximation of the derivative. The new formula is as follows:

newValue = oldValue - trainingRate * (newError - oldError)/delta
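The finite-difference estimate can be sketched as a function that evaluates the error twice. `approximateDerivative` is a hypothetical name, and `computeError` stands in for "render the model and compute its mean squared error", which is assumed rather than shown; the toy error function is for illustration only.

```javascript
// Hypothetical helper: forward-difference estimate of d(error)/d(params[index]).
// computeError stands in for "render the model and return its mean squared error".
function approximateDerivative(computeError, params, index, delta) {
  const oldError = computeError(params);
  const nudged = params.slice(); // copy so only one parameter changes
  nudged[index] += delta;
  const newError = computeError(nudged);
  return (newError - oldError) / delta;
}

// Toy error (not a render): squared distance from the point (1, 2).
// Its exact partial derivatives at (0, 0) are -2 and -4.
const toyError = ([a, b]) => (a - 1) ** 2 + (b - 2) ** 2;
console.log(approximateDerivative(toyError, [0, 0], 0, 1e-4)); // approximately -2
console.log(approximateDerivative(toyError, [0, 0], 1, 1e-4)); // approximately -4
```

One caveat, assuming a renderer without anti-aliasing: the rendered image only changes when a triangle edge crosses a pixel centre, so for vertex coordinates a delta much smaller than a pixel will often give a derivative of exactly zero. Since gl.readPixels with unsigned bytes rounds values to 1/255, very small colour changes may be rounded away too, so in practice delta may need to be larger than "sufficiently small" suggests.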

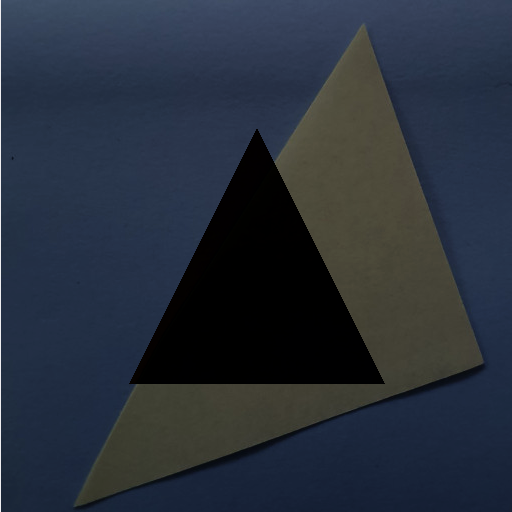
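Applying the finite-difference update to every parameter in turn gives a complete training loop. This is a sketch under assumptions: the parameters are a flat array of numbers (for this model, the background and foreground components followed by the 6 vertex coordinates), `computeError` is a hypothetical callback that would render those parameters and return the mean squared error, and each parameter is updated immediately rather than all together at the end of a pass, which the post does not specify. The made-up target below replaces real rendering.

```javascript
// Hypothetical training loop: for each parameter in turn, estimate its partial derivative with a
// forward difference and update it straight away (an assumed ordering, not taken from the post).
function train(initialParams, computeError, { trainingRate, delta, iterations }) {
  const params = initialParams.slice(); // work on a copy so the caller's array is untouched
  for (let iteration = 0; iteration < iterations; iteration++) {
    for (let index = 0; index < params.length; index++) {
      const oldError = computeError(params);
      const nudged = params.slice();
      nudged[index] += delta;
      const newError = computeError(nudged);
      // newValue = oldValue - trainingRate * (newError - oldError) / delta
      params[index] -= (trainingRate * (newError - oldError)) / delta;
    }
  }
  return params;
}

// Toy stand-in for rendering: the error is the squared distance to a made-up target,
// laid out as [background RGB, foreground RGB, x0, y0, x1, y1, x2, y2].
const target = [0.1, 0.2, 0.3, 0.9, 0.8, 0.7, -0.4, -0.6, 0.6, -0.3, 0.1, 0.7];
const toyError = (p) => p.reduce((sum, v, i) => sum + (v - target[i]) ** 2, 0);

const start = [0.25, 0.25, 0.25, 0.75, 0.75, 0.75, -0.5, -0.5, 0.5, -0.5, 0.0, 0.5];
const trained = train(start, toyError, { trainingRate: 0.1, delta: 1e-4, iterations: 200 });
console.log(trained.map((v) => v.toFixed(2)).join(", "));
```

With a forward difference, each parameter in this toy problem settles about `delta / 2` below its target, a small bias that shrinks as delta shrinks. With a real renderer, each pass costs two renders per parameter.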

If we apply this to each vertex coordinate and each component of the foreground and background colours, then after several iterations we should see the model converge to something like this: